I taught Intro to CS for the third time last semester, and there are still individual assignments that I tweak or replace, both to explore what is possible and to keep the material interesting for myself. Having discovered Evan Peck‘s Ethical Engine assignment, I ran it as a three hour lab during one of the last weeks of the semester. I thought it might be useful to others to see the process I went through adapting the assignment. All code is available on GitHub.

Background

The basic premise for this assignment is that, as self-driving cars become more common, the AI will have to start making life-or-death decisions, essentially turning the philosophical trolley problem “thought”-experiment into a highly-relevant real-world dilemma. Evan’s original assignment was based off of the Moral Machine webapp, and had two parts. First, students would write the decision procedure which would determine whether a self-driving car should save its passengers or the pedestrians. Once they are done, students would write code to “audit” a blackbox decision procedure, to see whether it treated people of different genders, ages, social statuses, etc. equally. The idea is to provide a concrete example of the real life consequences of code, and that code audits may expose algorithmic bias.

Planning

Thanks to Evan putting his work on Github, I could have used the assignment as is. Evan’s write up did not describe how he used the assignment, however, and I wasn’t sure it would be appropriate for a three-hour pair-programmed lab. Mulling through his materials, I realized that my goals were slightly different. One key point that was missing from the assignment is that our values may not translate perfectly into code – that is, even if our intended algorithm was entirely “ethical”, our buggy implementation of that algorithm may not be. And this is without getting into how people may not agree on what is “ethical” in the first place, and that good intentions may not be sufficient.

To push students on these points, I partially deemphasized the role of students as code auditors, instead giving students more time to examine their own decision procedures. The lab broke down in three parts:

-

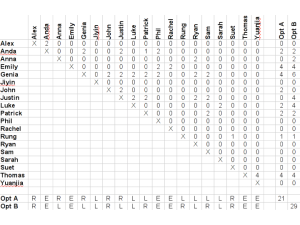

First, in pairs, students had to manually work through sixty randomly-generated trolley problems. Making the decisions as a group makes a discussion of the underlying ethical principles more likely, but the nature of the trolley problem also necessitated a content warning. Once students have gone through all sixty scenarios, they are presented with the statistics of their decisions. Both the scenario generator and the statistics calculation were taking from Evan’s code.

-

The next part of the lab is for students to translate their own decision procedure into code. This is the same as the first part of Evan’s lab. Building on the new introduction, however, I then asked students to use the same sixty problems from the first part of the lab to compare their algorithmic decisions against their manual ones (their decisions were logged to a file). The provided code not only allowed students to compare the statistical summaries, but also specific cases, so students can figure out why their code behaved differently than they did.

-

Finally, I asked students to reflect on the lab with the following questions:

- Explain the reasoning behind your decision function.

- How accurately did your automatic model match up with your manual decisions? Modify and run

find_difference.py to help you identify specific scenarios where your decisions differed.

- Looking at the statistics over 100,000 scenarios, are there priorities that do not match what you had intended? How do you think they came about?

- Compare your statistics from the previous question to those from another group. Which decision process would you rather a self-driving have? Why?

- Based on this exercise, what are some challenges to building (and programming) ethical self-driving cars?

Reflection

Reading the evaluations on the lab, the students generally found the assignment meaningful. Some students noted that the subject matter was heavy and somber, but still found it thought provoking. For example, one student wrote “While it was kind of dark deciding who lived and who died, I thought this lab was fun and interesting.” The point of the groupwork was also noted by a different student: “It was interesting to see the results […] and comparing them to the results of other groups to see what their thought process was.” I should note that the lab was also situated in a week of lectures around AI and society, including data privacy, automation and job loss, and tech legislation. These additional lectures provided more room for discussion, and the week served as a big-picture conclusion for the semester.

The most positive unexpected outcome of the lab, however, was one student who wanted to push the discussion of ethics much further. They wrote:

Ok this lab was interesting but the amount of really fucked up shit I heard was extraordinary. I think after this lab we should have some reading on body positive movements that originated out of feminism and how size is not always tied to health. In addition, I think we should have reading or discussion on how Black and Brown communities are more often criminalized. Also, I think it would be cool if we have some discussion on saving “disenfranchised” individuals – like woman – and how that would be determined. For instance why would a woman as a disenfranchised individual be prioritized but not a person who is overweight. Even if we don’t have these discussions, maybe we could have them written in our questions.

This student was referring to the attributes that were used in the scenario generator. I did not notice the conversations that the student overheard, but looking at the submitted code confirmed that some groups prioritized passengers/pedestrians who were “athletic” over those “overweight”, or the children over the elderly. I reached out to this student by email, and they also pointed out how “homeless” and “criminal” should not be considered professions. The student continued,

The use of body type in the code, as well – it threw me off. I felt very uncomfortable overhearing my peers casually debating whether someone was more valuable based on their size. […] In some ways, I thought having that be part of the description really allowed me to be critical of all the categories and allowed me to think about why that description might be included.

In retrospect, this is a lesson I should have learned already. At SIGCSE 2017, I led a Birds of a Feather (BoF) on Weaving Diversity and Inclusion into CS Content. One of the points raised in the discussion was that content on social inequality must be approached sensitively, as some students may be going through those exact problems in their personal lives. This was why I included a content warning for the lab, but this student’s comments made me realize I must be more aware of even the details in the future.

Talking with this student also made me realize that my existing discussion questions were inadequate. None of them directly probed at what should be considered ethical, which is extremely ironic given the content. I was galvanized to rewrite the questions entirely (I am in the middle of refactoring the code, so some functions may not exist on Github yet):

-

Consider your manual decision making process.

- Are there attributes of the passengers and pedestrians that had a higher (or lower) survival rate, but that was not a conscious factor in your decision process?

- What attributes went into your decisions? Does that attribute positively or negatively affect that person’s survival? Why did you consider those attributes?

- Is the use of those attributes to make those decision “fair” or “ethical” or “moral”? Why/Why not?

-

Change the last lines of automatic.py to call compare_manual. Running automatic.py now will run your function on the same 60 scenarios you manually worked through, and show you the scenarios where your automatic and manual decisions differed, as well as the statistics for your automatic decisions. Answer the following questions:

- How accurately did your automatic model match up with your manual decisions?

- For each scenario where your manual and automatic decisions disagreed, explain why. What were you considering when you made the decision manually? What did the automatic decision not take into account, or what did it take into account that it shouldn’t?

-

Change the last lines of automatic.py to run on 100,000 random scenarios. Include these statistics in your submission. Compare the simulated rates of being saved within each attribute (age, gender, etc.). Assign the attribute into one of these categories:

- Category 1: Deliberately used in decision, and the survival rates reflect what you intended

- Category 2: Deliberately used in decision, but the survival rates do not reflect what you intended

- Category 3: Not explicitly used in decision, but the survival rates are not equal between groups

- Category 4: Not explicitly used in decision, and the survival rates are equal between groups

For every attribute in Category 2, explain why the statistics do not reflect your (explicit) intented decision process. For every attribute in Category 3, explain why the survival rates are not equal despite not being used in your decision process.

-

Working with another group, explain to each other how you made your automatic decisions and compare your statistics from the previous question.

- How do the decision processes differ? What was the reasoning behind each difference?

- Did that lead to different statistics, in either ranking or in survival rate?

- Which decision process would you prefer to be in a self-driving car? Why?

-

Based on this exercise, what are some challenges to building (and programming) ethical self-driving cars?

Conclusion

Although my goals for the lab were achieved, it was clear that the assignment had a lot of potential that I didn’t tap into. Several people have noted that ethics must be incorporated into existing CS classes, and while there are some resources out there, much of it is still to be developed and refined. I could not have create this lab without Evan Peck for sharing his assignment, and I hope this writeup will be useful to others as well.

Closing on a tangential point: a recent Chronicle article was titled What Professors Can Learn About Teaching From Their Students (paywalled). The article was about students formally observing a classroom, but I have found that even informal feedback can be extremely useful. I urge other instructors to reach out to their students for comments and suggestions, especially for newly developed material.